Behavioural Analytics, Privacy and Regulation

08 April 2025

For decades, the financial sector has led the way in developing online channels, and it is also one of the most heavily regulated industries. This blog explores the key regulations affecting AI, Behavioural Analytics, and scam prevention: GDPR, PSD2, the EU AI Act, DORA, and PSD3.

Privacy & Regulation Principles in Behavioural Analytics

Three principles recur, in different forms, throughout these regulations.

Principle 1: Improve Consumer Payment Protection

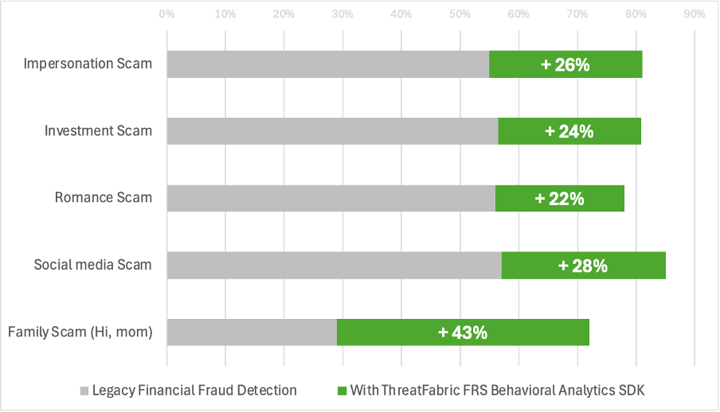

Behavioural Analytics enables financial institutions to detect risk signals when scammers manipulate victims' behaviour. Our previous blog explains this process in detail. The measured improvements in detection demonstrate that consumers are significantly better protected with Behavioural Analytics than without it.

Principle 2: Privacy Preservation and Edge AI

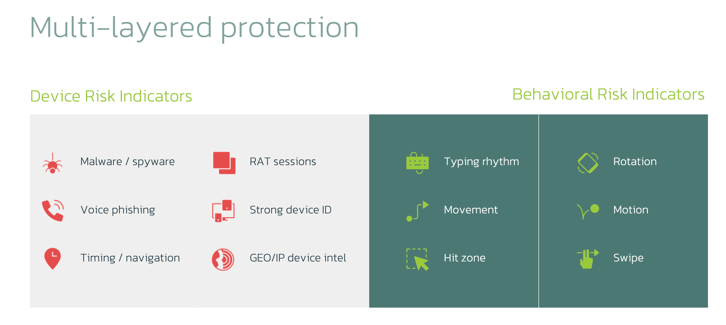

ThreatFabric Behavioural Analytics models preserve privacy in two ways.

- No Personally Identifiable Information (PII) or financial information is used in building the models. On mobile, the models use the device's sensors; on web channels, they use click-and-type telemetry. The models calculate a risk score, and this score is the data sent to the financial institution's risk engine. No PII is collected or transmitted.

- Keep it on the endpoint with Edge AI. ThreatFabric deploys models directly on the mobile device. Data is processed and analysed in near real time, without relying on sending data to a cloud or SaaS application. The models are designed to have minimal impact on performance and SDK size.

This way, the requirements from GDPR and other regulatory principles are not only met, but exceeded.
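To make the edge pattern above concrete, here is a minimal Python sketch, not ThreatFabric's implementation. It assumes a toy scoring rule and hypothetical names (`SensorSample`, `RiskMessage`, `score_on_device`, `build_payload`, and an opaque `session_token`). It only illustrates that raw telemetry stays local and that the outgoing message carries a score rather than the underlying signals.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SensorSample:
    """Hypothetical raw telemetry. In this sketch it never leaves the device."""
    typing_interval_ms: float
    touch_pressure: float
    device_tilt_deg: float


@dataclass(frozen=True)
class RiskMessage:
    """The only structure serialised for the risk engine in this sketch."""
    session_token: str  # assumed opaque, random, and not derived from PII
    risk_score: float


def score_on_device(samples: list[SensorSample]) -> float:
    """Toy stand-in for an on-device model; returns a score in [0, 1]."""
    if not samples:
        return 0.0
    mean_interval = sum(s.typing_interval_ms for s in samples) / len(samples)
    # Illustrative assumption: unusually long pauses between keystrokes
    # raise the score. A real model would be trained, not hand-written.
    return max(0.0, min(1.0, (mean_interval - 200.0) / 800.0))


def build_payload(session_token: str, samples: list[SensorSample]) -> str:
    """Score locally, then serialise only the token and the score."""
    score = round(score_on_device(samples), 3)
    return json.dumps(asdict(RiskMessage(session_token, score)), sort_keys=True)
```

Calling `build_payload("abc", samples)` yields a JSON string with exactly two fields, `risk_score` and `session_token`; the sensor readings themselves are discarded once the score is computed.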

Principle 3: Model Transparency

The sensors and data points outlined in Principle 2 provide input to the AI models. The output consists of messages containing risk scores. The methodology behind these risk scores can be examined on the ThreatFabric FRS portal, where you can see which inputs contributed to the risk score. We refer to this principle as:

"Transparency up to the Sensor Level"
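One way to picture sensor-level transparency is a score that can be broken down into per-input contributions. The Python sketch below assumes a simple weighted sum over normalised signals; the signal names, the weights, and the `explain_risk` function are hypothetical and are not taken from the ThreatFabric models or the FRS portal.

```python
# Hypothetical weights for illustration; they sum to 1 so that the score
# stays in [0, 1] when every signal is normalised to [0, 1].
WEIGHTS = {
    "typing_rhythm": 0.5,
    "touch_pressure": 0.2,
    "device_motion": 0.3,
}


def explain_risk(signals: dict[str, float]) -> dict:
    """Return a risk score plus each input's contribution, largest first."""
    contributions = {
        name: weight * signals.get(name, 0.0)
        for name, weight in WEIGHTS.items()
    }
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return {
        "risk_score": round(sum(contributions.values()), 3),
        "contributions": [(name, round(value, 3)) for name, value in ranked],
    }
```

With a linear rule like this, the contributions add up to the score, so an analyst can see which input drove a high value. More complex models would need a dedicated attribution method to give a comparable breakdown.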

Do they get along?

Behavioural Analytics offers superior protection against scams compared to any other technology available today. It's crucial to implement AI in a manner that aligns with Privacy Principles and Regulations, such as GDPR. Historically, there was concern over the opacity of AI models. However, with increasing demands for Model Transparency, understanding how these models operate has become essential for integrating Behavioural Models into your Fraud Prevention strategy.

In conclusion, Privacy, Regulation, and Behavioural Analytics work exceptionally well together – if architected with Privacy Preservation in mind.

For more information on ThreatFabric Behavioural Analytics, see the first blog in this series.

Full list of Regulations and applicability

General Data Protection Regulation (GDPR)

- Effective Date: 2018

- Relevance: Primarily focused on data protection, GDPR requires banks to implement measures to prevent data breaches and fraud.

- Privacy and Consumer Protection: Provides comprehensive data protection and privacy rights for individuals within the EU.

- AI Mentions: AI applications must comply with data protection principles such as purpose limitation and data minimization.

Payment Services Directive 2 (PSD2)

- Effective Date: 2018

- Relevance: Enhances security measures for electronic payments and requires strong customer authentication to prevent fraud.

- Privacy and Consumer Protection: Improves consumer protection and security for payment transactions.

- AI Mentions: AI is used to enhance payment security and fraud detection.

EU AI Act

- Effective Date: 2024

- Relevance: Provides a framework for regulating AI systems and general-purpose models.

- Privacy and Consumer Protection: Ensures transparency, data protection, and human oversight in AI applications.

- AI Mentions: Establishes rules for AI systems and models based on risk levels, covering high-risk AI systems and including provisions for transparency and disclosure.

Digital Operational Resilience Act (DORA)

- Effective Date: 2025

- Relevance: Aims to strengthen the IT security of financial entities and ensure resilience.

- Privacy and Consumer Protection: Complements existing EU data protection rules, focusing on ICT risk management and cybersecurity.

- AI Mentions: Does not specifically mention the use of AI for consumer protection.

Payment Services Directive 3 (PSD3)

- Effective Date: Expected 2027

- Relevance: Builds on PSD2 to enhance consumer protection, promote open banking, and improve transparency in cross-border payments.

- Privacy and Consumer Protection: Includes stronger security measures and transparency in transactions.

- AI Mentions: AI is used for real-time fraud detection and information sharing.